Designing for Trust: Introducing Confidence Indicators in Loopio’s AI Workflow

I led the design of a system of trust indicators, starting with a Confidence Score, to increase adoption of AI-generated answers in enterprise workflows. Through research, competitive analysis, and cross-functional workshops, we identified five key answer qualities and introduced natural language explanations users could rely on. This shift toward interpretable trust led to higher user confidence, reduced rework, and improved adoption of AI tools across the platform.

Company: Loopio
Role: Design Lead
Project Length: 2 months

Problem

As Loopio integrated AI into its unified answers experience, a consistent barrier emerged: enterprise users wouldn’t adopt automation unless they could trust the output. Accuracy, auditability, and compliance were more important than raw speed—especially for proposal managers and SMEs working on customer-facing RFPs.

Even when users were impressed by automation, they hesitated without understanding:

Where did this come from?

How reliable is it?

Can I defend this answer to a stakeholder?

To move forward, we needed to build transparency into the automation itself, proactively surfacing confidence signals, sources, and rationale alongside the results.

Competitive Analysis

To understand how others approach AI trust, I audited both direct competitors (Responsive, AutoGen, Proposify, 1up, AutoRFP) and industry leaders (GitHub Copilot, ChatGPT, Perplexity, Notion AI, Google Workspace AI).

Key gaps identified:

Confidence signals were often hidden or overly technical

Explanations were vague—typically just a percentage or score

Most systems weren’t built for enterprise evaluators or proposal teams

This clarified the opportunity: a clear, user-facing trust layer written in the language proposal teams already use, not in engineering terms.

Collaborative Workshops

I facilitated design-only workshops in which designers brainstormed trust markers for the parts of the platform they owned. This focused approach generated diverse, user-centered ideas tailored to specific content domains, such as AI-generated answers versus library answers.

These sessions laid the groundwork for prioritizing trust indicators that could be designed and shipped quickly to deliver meaningful user value.

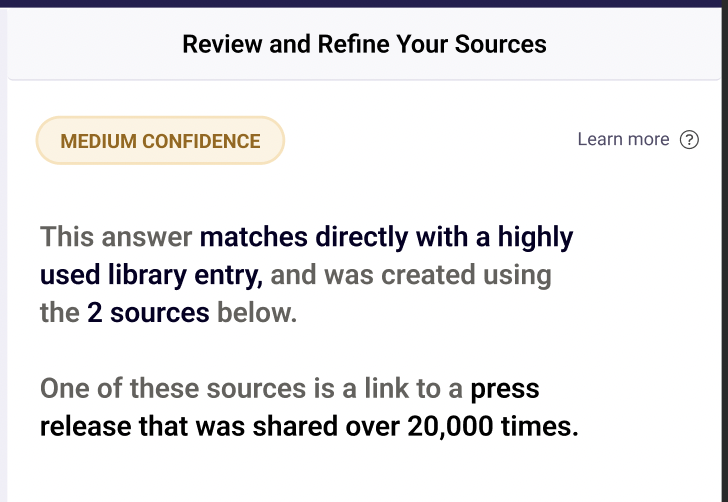

Research Findings: Trust Through Transparency & Confidence

We interviewed 7 GenAI users from the Enterprise and Mid-Market segments, recruited using CSM input and Salesforce data. To ensure rigor, two AI assistants analyzed the interview data independently, and we cross-validated their insights against each other.

Key Insight:

All participants emphasized two needs for building trust: traceability, meaning clear visibility into where AI-generated answers come from, and interpretable confidence scores. Without these, users won’t adopt the unified AI experience. As one user said, “We are control freaks... we need to track where it comes from.”

Design implications:

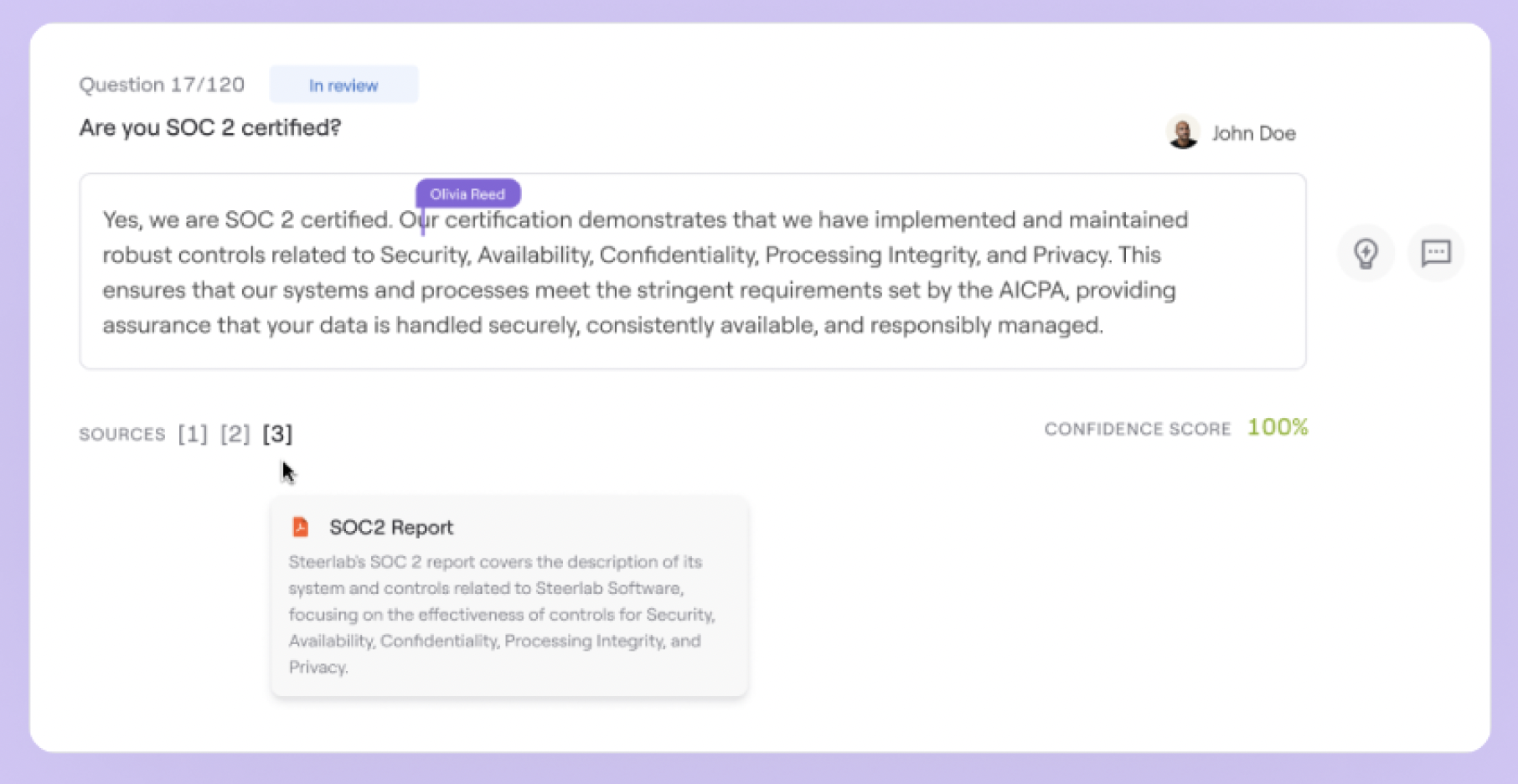

Traceability is essential.

Confidence scores must explain why an answer is given, ideally through natural language rather than just numbers.

Users want control over how AI transforms their content, with clear labels on AI-generated content.

These findings shaped the trust indicators we designed to meet enterprise users’ expectations for transparency and control.

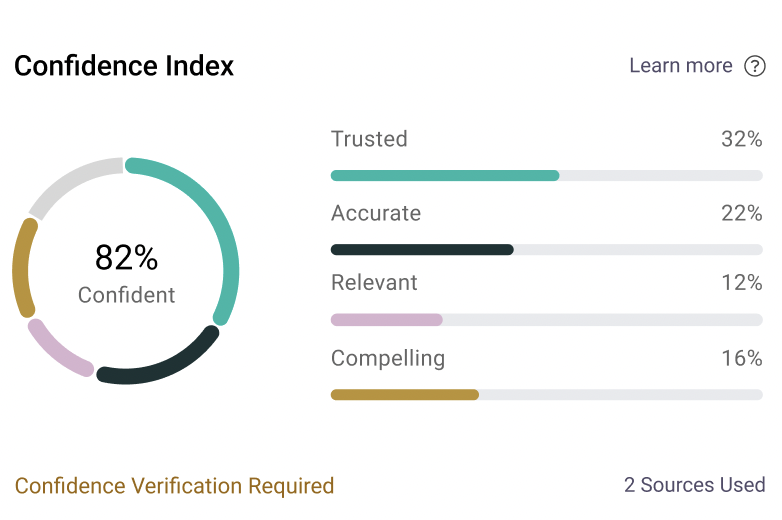

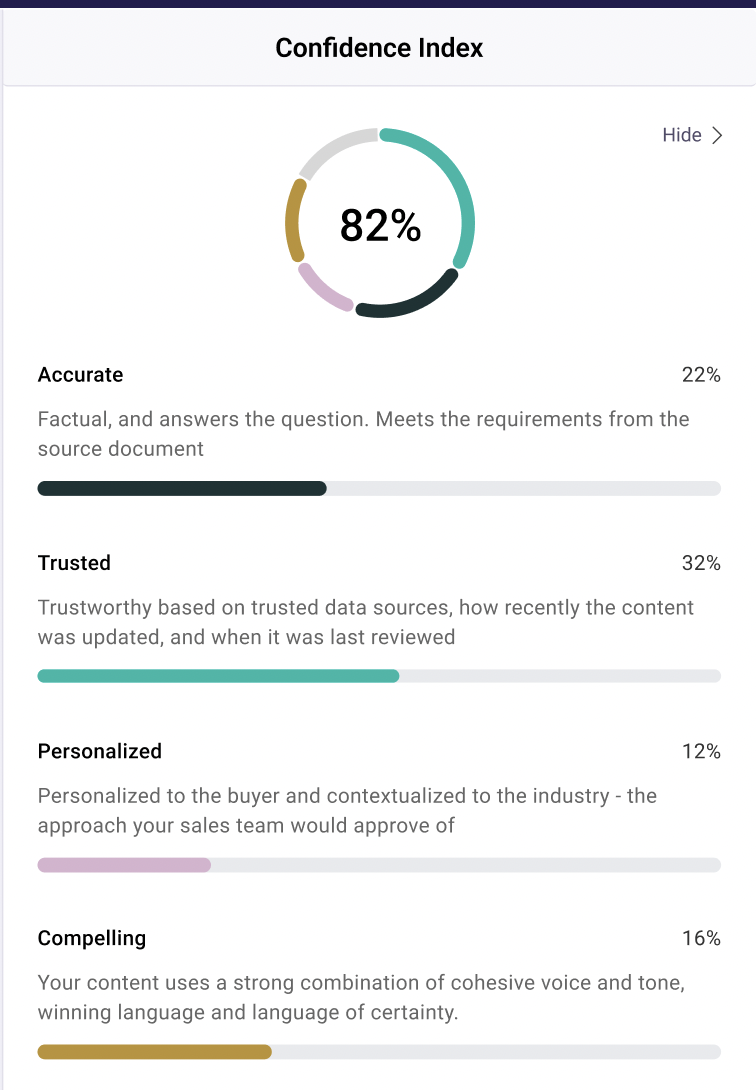

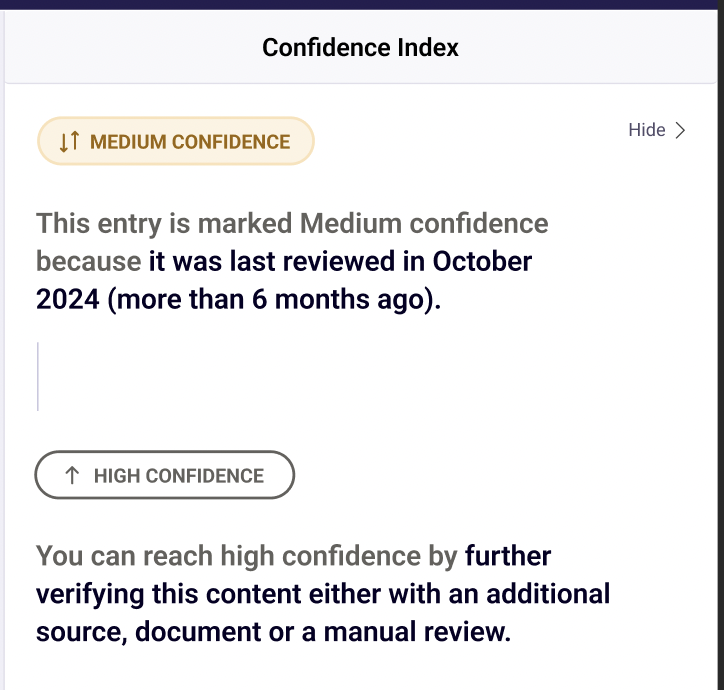

Designing the Confidence Score

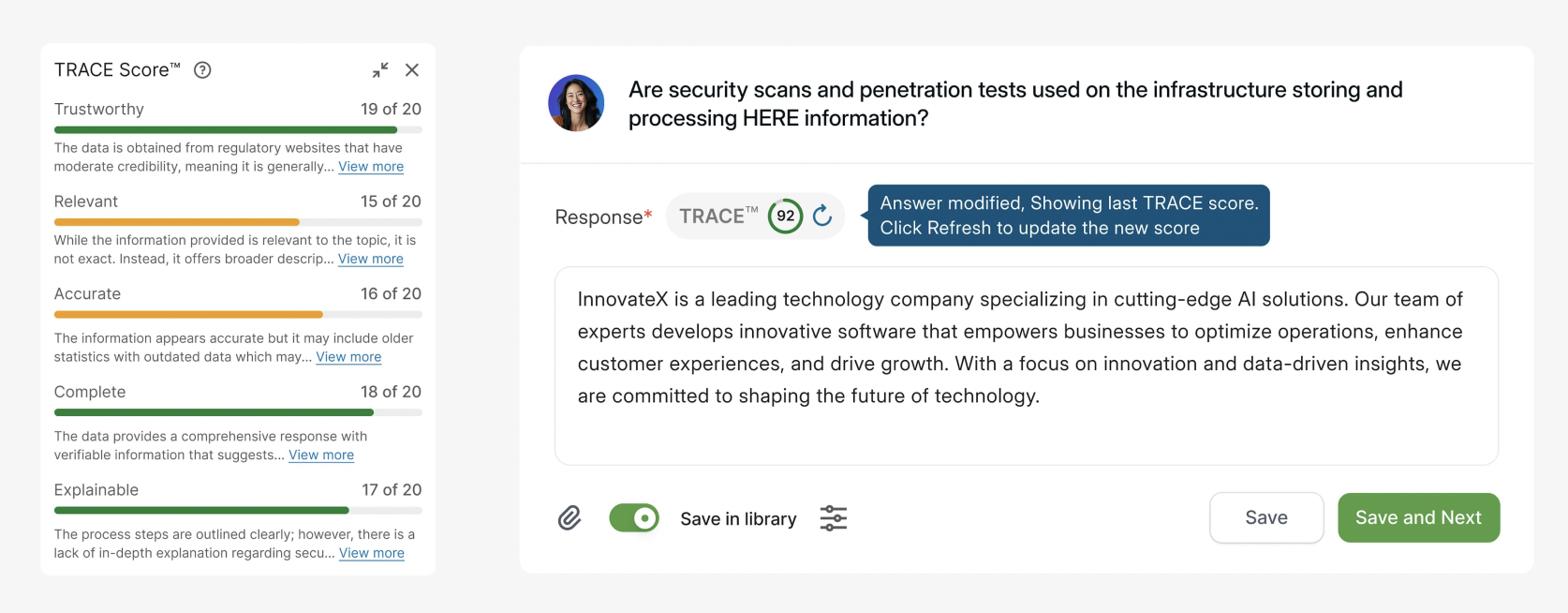

I led the naming exploration for the Confidence Score in close collaboration with the Brand and PMM teams, ensuring the name aligned with our strategy and resonated with users. I framed and designed the UX around five core qualities that users identified as most important:

Trustworthy – factually and ethically sound

Accurate – sourced and contextually relevant

Compelling – persuasive and well-written

Personalized – aligned to the organization’s voice

Competitive – positioned to win against alternatives

Instead of scoring each trait separately, we developed a summarization model that delivers a clear, plain-language explanation—for example, “This answer is accurate and compelling but may not be fully personalized to your company’s tone.”

This approach resonated most with users in testing: it gave them quick, transparent insight into the score instead of hiding the reasoning behind a black-box number.

Solution

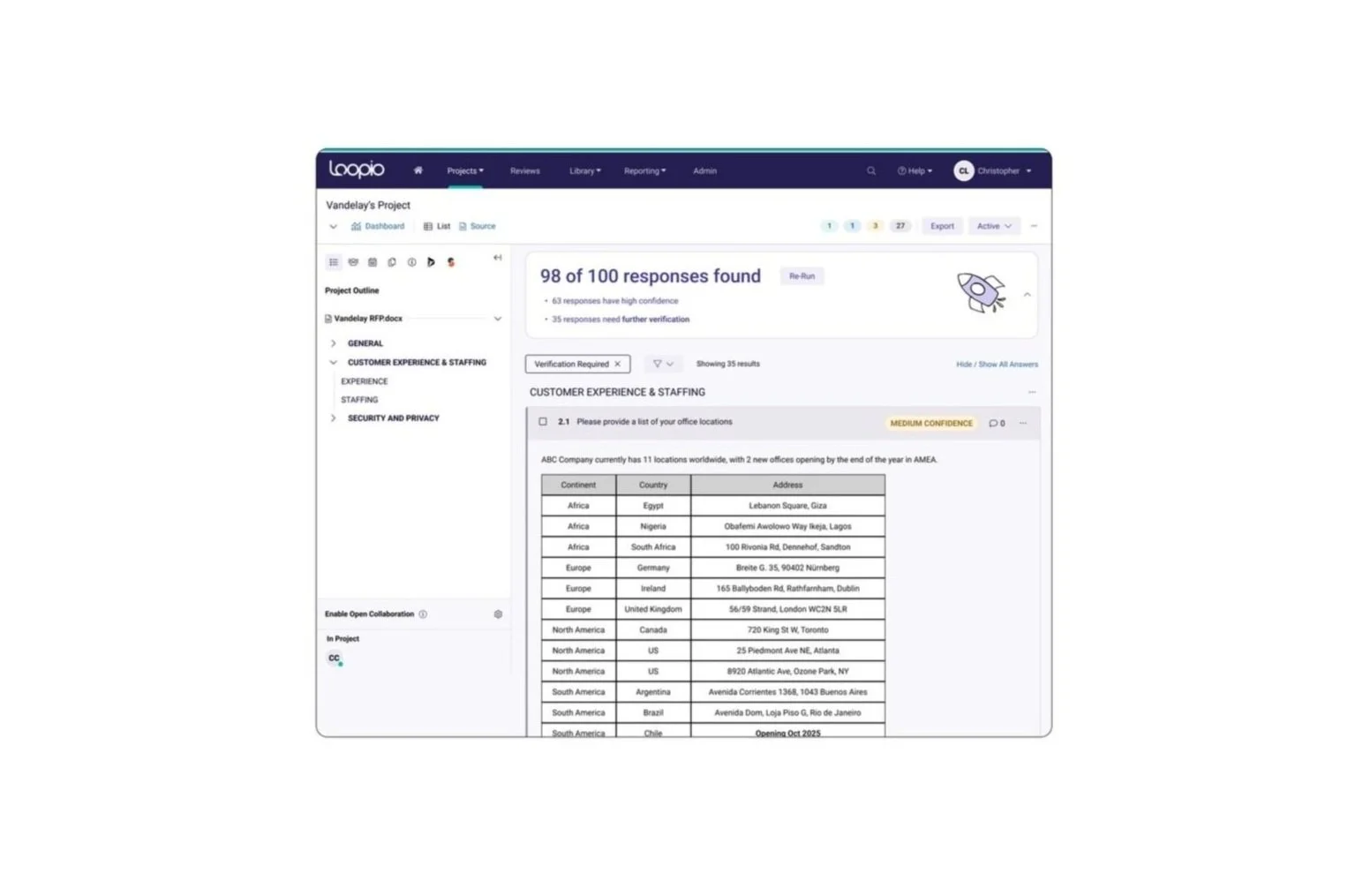

We shipped the first version of the Confidence Score as part of Loopio’s AI answer workflow:

✅ Plain-language confidence explanations

✅ Visible citations for traceability

✅ Editable sections so users could improve weaker areas

✅ Role-based permissions for trust controls at scale

This became the first in a broader system of trust markers, designed to scale alongside Loopio’s AI strategy.

Impact

Early signals were strong:

70%+ interaction rate on Confidence Score tooltips in the first 4 weeks

85% of pilot users said the explanations increased their trust in AI-generated content

30% drop in rework reported by proposal managers